Introduction

Large Language Models (LLMs) have revolutionized the field of Natural Language Processing (NLP) by enabling generative, interactive, and task-oriented AI applications. These models, trained on vast amounts of textual data, have emerged as foundational tools for various domains, from chatbots to content generation. This article provides a detailed overview of the key principles underlying LLMs, including pre-training, generative modeling, prompting techniques, and alignment methodologies.

Pre-training in Large Language Models

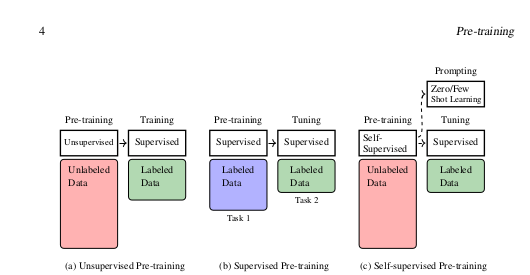

Pre-training forms the backbone of modern LLMs. The process involves training a neural network on massive datasets, most commonly with self-supervised objectives. Pre-training approaches fall into three broad categories:

- Unsupervised Pre-training – The model is trained on unlabeled text, learning patterns and language structures without explicit task labels.

- Supervised Pre-training – The model is trained on labeled datasets where human-provided annotations guide its learning.

- Self-supervised Pre-training – The model derives its own supervision signals from unlabeled text, for example through masked language modeling (e.g., BERT) or next-token prediction (e.g., GPT).

These pre-training paradigms help LLMs acquire general linguistic capabilities, which can later be fine-tuned for specific tasks.
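
The two self-supervised objectives mentioned above turn raw text into training targets without any human labels. The following Python sketch is a simplification that assumes whitespace tokenization; the helper names `next_token_pairs` and `mask_tokens` are hypothetical, chosen only to show how each objective builds its examples.

```python
import random


def next_token_pairs(tokens):
    """Build (context, target) pairs for next-token prediction (GPT-style).

    Every prefix of the sequence is used to predict the token that follows it.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Build a masked-language-modeling example (BERT-style, simplified).

    A random subset of positions is replaced by mask_token; the model must
    recover the original tokens at those positions. Real BERT sometimes keeps
    or randomizes selected tokens instead of masking them; that is omitted here.
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[i] = tok  # label = the original token at this position
        else:
            masked.append(tok)
    return masked, targets


tokens = "the cat sat on the mat".split()
for context, target in next_token_pairs(tokens):
    print(context, "->", target)

masked, targets = mask_tokens(tokens, mask_prob=0.3)
print(masked, targets)
```

In both cases the labels come from the text itself, which is what lets pre-training scale to corpora far larger than any human-annotated dataset.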

Generative Models

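LLMs function primarily as generative models, predicting the next token in a sequence based on prior context. Generation aims to find the most probable output:

$$\hat{y} = \arg\max_{y} \Pr(y \mid x)$$

where $x$ represents the input sequence and $y$ denotes a candidate output sequence of $T$ tokens. The model computes this probability autoregressively, one token at a time:

$$\Pr(y \mid x) = \prod_{t=1}^{T} \Pr(y_t \mid x, y_{<t})$$

This autoregressive factorization allows models to generate coherent, contextually relevant text. Because searching over every possible output is intractable, decoders approximate the argmax (e.g., with greedy or beam search) or sample from the distribution instead. Popular Transformer architectures include: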

- Decoder-only Transformers (e.g., GPT) – Well suited to text generation, translation, and conversation.

- Encoder-only Transformers (e.g., BERT) – Used for classification, sentiment analysis, and text embedding rather than open-ended text generation.

- Encoder-Decoder Models (e.g., T5, BART) – Suited to sequence-to-sequence tasks such as translation and summarization, where the encoded input conditions the generated output.
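
To make the left-to-right generation loop concrete, here is a minimal sketch of greedy decoding in Python. It assumes a hypothetical toy bigram table (`BIGRAM`) standing in for a real model, which would condition on the entire prefix rather than only the last token; the function names are illustrative, not from any library.

```python
import math

# Hypothetical toy "model": P(next token | previous token).
# A real LLM conditions on the whole prefix, not just the last token.
BIGRAM = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "</s>": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "</s>": 0.2},
    "dog": {"ran": 0.6, "</s>": 0.4},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def next_token_distribution(prefix):
    """Return P(y_t | y_<t) for the toy model (it only looks at the last token)."""
    return BIGRAM[prefix[-1]]


def greedy_decode(max_len=10):
    """Generate left to right, picking the most probable next token each step.

    Also accumulates log P(y) = sum_t log P(y_t | y_<t), the chain-rule
    factorization of the sequence probability.
    """
    seq, log_prob = ["<s>"], 0.0
    for _ in range(max_len):
        dist = next_token_distribution(seq)
        token = max(dist, key=dist.get)  # locally best choice
        log_prob += math.log(dist[token])
        seq.append(token)
        if token == "</s>":
            break
    return seq[1:], log_prob


tokens, lp = greedy_decode()
print(tokens, math.exp(lp))
```

Greedy decoding picks the locally best token at each step, so it only approximates the argmax over whole sequences. For this small table it happens to return the most probable sequence (`the cat sat </s>`, probability 0.6 × 0.5 × 0.8 × 1.0 = 0.24), but that is not guaranteed in general, which is why beam search and sampling are also common.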

Prompting Techniques

Prompting is an essential mechanism for controlling LLM behavior without retraining. It involves crafting input instructions that elicit desired responses. Prominent prompting methods include:

- Basic Prompting – Providing a direct instruction, such as “Translate this sentence into French.”

- In-context Learning (ICL) – Demonstrating task execution via examples within the prompt.

- Chain-of-Thought (CoT) Prompting – Encouraging step-by-step reasoning by appending instructions like “Let’s think step by step.”

- Zero-shot and Few-shot Prompting – Supplying no examples (zero-shot) or only a handful (few-shot) to guide model responses.
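
The differences between these styles are easiest to see in the prompt text itself. The following Python sketch builds zero-shot, few-shot, and zero-shot chain-of-thought prompts; the `Input:`/`Output:` template and the function names are illustrative assumptions, not a format that any particular model requires.

```python
def zero_shot_prompt(instruction, query):
    """Instruction only, with no worked examples."""
    return f"{instruction}\n\nInput: {query}\nOutput:"


def few_shot_prompt(instruction, examples, query):
    """Instruction plus a few (input, output) demonstrations (in-context learning)."""
    demos = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{demos}\n\nInput: {query}\nOutput:"


def chain_of_thought_prompt(question):
    """Zero-shot chain-of-thought: append a cue that invites step-by-step reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


examples = [("Good morning", "Bonjour"), ("Thank you", "Merci")]
print(zero_shot_prompt("Translate English to French.", "Good night"))
print(few_shot_prompt("Translate English to French.", examples, "Good night"))
print(chain_of_thought_prompt("If I have 3 apples and eat one, how many remain?"))
```

The model's weights are identical in all three cases; only the text it conditions on changes, which is why prompting can steer behavior without retraining.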

Advanced prompting techniques, such as soft prompts (learned continuous embeddings used in place of, or alongside, text-based prompts) and ensemble prompting (aggregating the outputs of multiple prompts), can further improve LLM performance.
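
Ensemble prompting can be as simple as asking the same question several ways and taking a majority vote. In this minimal Python sketch, `toy_model` is a hypothetical stand-in for a real LLM call, and majority voting is only one of several possible aggregation rules.

```python
from collections import Counter


def ensemble_answer(model, prompts):
    """Query the model once per prompt and return the most common answer.

    `model` is any callable mapping a prompt string to an answer string.
    Returns (answer, fraction of prompts that agreed). Ties go to the answer
    seen first, because Counter.most_common orders equal counts by first
    occurrence.
    """
    answers = [model(p) for p in prompts]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)


def toy_model(prompt):
    # Hypothetical stand-in for an LLM: one phrasing "confuses" it.
    return "Paris" if "capital" in prompt else "Lyon"


prompts = [
    "What is the capital of France?",
    "Name the capital city of France.",
    "Which French city hosts the national government?",
]
print(ensemble_answer(toy_model, prompts))  # ('Paris', 0.6666666666666666)
```

The agreement fraction doubles as a rough confidence signal: a low value suggests the answer is sensitive to phrasing.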

Alignment of Large Language Models

The Challenge of Alignment

LLMs must align with human values, expectations, and ethical considerations. This process is complex due to the diverse and evolving nature of human preferences. Poorly aligned models may generate biased, harmful, or misleading content.

Alignment Strategies

- Supervised Fine-tuning (SFT) – Training on labeled instruction-response pairs to improve adherence to user instructions.

- Learning from Human Feedback – Using expert-annotated responses to refine model behavior.

- Reinforcement Learning from Human Feedback (RLHF) – Training a reward model on human preference rankings, then optimizing the LLM with reinforcement learning (commonly PPO) to maximize that reward.

- Direct Preference Optimization (DPO) – An alternative to RLHF that optimizes the model directly on pairs of preferred and rejected responses, without training a separate reward model or running a reinforcement learning loop.
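
The preference-based objectives above reduce to short loss functions once per-sequence scores are available. This Python sketch assumes rewards and log-probabilities log π(y | x) were computed elsewhere (the function names are hypothetical) and shows the standard pairwise reward-model loss used in RLHF alongside the per-pair DPO loss; `beta=0.1` is only an illustrative default, and the reinforcement learning stage of RLHF (e.g., PPO) is not shown.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def reward_model_loss(r_chosen, r_rejected):
    """Pairwise (Bradley-Terry) loss for an RLHF reward model:
    -log sigmoid(r(x, y_w) - r(x, y_l))."""
    return -log_sigmoid(r_chosen - r_rejected)


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Arguments are sequence log-probabilities log pi(y|x) of the chosen (y_w)
    and rejected (y_l) responses under the policy being trained and under a
    frozen reference model.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


print(reward_model_loss(2.0, 0.0))
print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

Both losses equal log 2 (about 0.693) when the two responses are scored equally, fall below it when the chosen response is favored, and rise above it otherwise. In DPO the "reward" is implicit in the log-ratio between the policy and the reference model, which is what removes the need for a separate reward model.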

Alignment aims to ensure that LLMs not only generate accurate and useful outputs but also adhere to ethical standards.

Conclusion

Large Language Models are at the forefront of AI innovation, driven by pre-training, generative modeling, effective prompting, and careful alignment. While they offer transformative capabilities, challenges remain in improving their accuracy, ethical behavior, and adaptability. Continued research in these areas will refine LLMs, making them more robust and beneficial to society.

This article serves as a foundational guide to understanding LLMs and their key components. As advancements continue, further exploration into more efficient architectures, improved alignment techniques, and novel prompting strategies will shape the future of AI-driven language models.