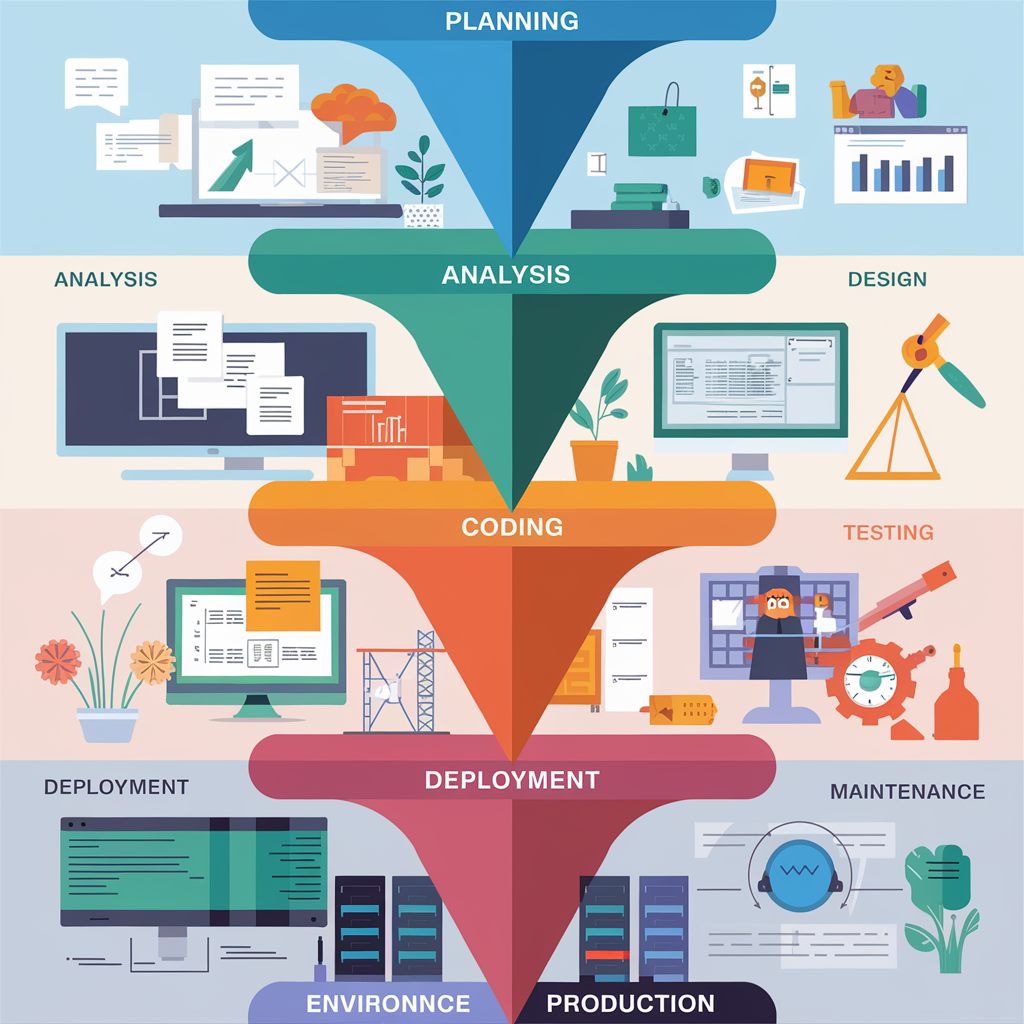

One of the most frequently debated topics in software engineering is how many environments a team needs to support a smooth and reliable software development lifecycle (SDLC). There is no universal answer: what works for one team may not suit another. Some companies keep to a minimal set of environments, while others run a larger ecosystem that includes environments such as staging, pre-production, and beta.

Striking the right balance between too few and too many environments can be difficult. Environments are key to ensuring software is developed, tested, and released safely, but managing too many can slow down delivery and increase costs. On the other hand, having too few environments can create bottlenecks, lead to bugs escaping into production, and reduce the ability to fully test and validate software under real-world conditions.

The purpose of this post is to explore the types of environments commonly used across the SDLC, explain the role each one plays, and identify the teams or individuals who need access to each. It also covers best practices and tooling for managing environments and streamlining the release process.

1. The Core SDLC Environments

Each environment in the SDLC plays a distinct role in progressing software from development through testing to production. The following sections break down the essential environments, along with the profiles that have access to them and their responsibilities.

1.1. Local Environment

- Purpose: The local environment is where individual developers write and test code before submitting it to shared environments. It typically includes the developer’s personal machine and mirrors the necessary parts of the development stack, such as databases or microservices, either natively or via containerization.

- Roles with Access:

- Developers: Responsible for writing, testing, and debugging code in their local environment.

- DevOps: Occasionally provides configurations for containerized environments like Docker to ensure local environments match higher environments.

- Tooling:

- IDEs: Visual Studio Code, IntelliJ IDEA

- Containerization: Docker, Vagrant

- Version Control: Git, GitLab

- Local databases and dependency management

- Best Practices:

- Use the same versions of libraries, dependencies, and databases as higher environments.

- Ensure easy setup using containerization to prevent “works on my machine” issues.

- Developers should write unit tests before pushing changes to the next environment.
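The first practice above, matching versions with higher environments, can be checked mechanically. The sketch below assumes each environment's pinned versions are available as a simple name-to-version mapping (for example, parsed from a lock file). The function name `find_version_mismatches` and the sample packages are hypothetical.

```python
def find_version_mismatches(local, target):
    """Return {name: (local_version, target_version)} for every difference.

    A component missing on one side is reported with None for that side.
    """
    mismatches = {}
    for name in sorted(set(local) | set(target)):
        local_version = local.get(name)
        target_version = target.get(name)
        if local_version != target_version:
            mismatches[name] = (local_version, target_version)
    return mismatches


# Hypothetical pinned versions, for illustration only.
local_env = {"postgres": "15.4", "requests": "2.31.0", "redis": "7.2"}
dev_env = {"postgres": "15.4", "requests": "2.32.0"}

for name, (mine, theirs) in find_version_mismatches(local_env, dev_env).items():
    print(f"{name}: local={mine} dev={theirs}")
```

A check like this could run as a pre-push hook so that drift is noticed before code reaches a shared environment.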

1.2. Development (Dev) Environment

- Purpose: The development environment is a centralized, shared space where developers integrate their code, conduct initial testing, and collaborate with the team. This environment typically includes access to version control, build servers, and automated test tools.

- Roles with Access:

- Developers: Check in code and test integration with other team members’ work.

- DevOps Engineers: Set up and manage the continuous integration/continuous delivery (CI/CD) pipeline.

- QA Testers: Perform basic functional tests, although more comprehensive tests occur in later environments.

- Business Analysts (BA) and Product Owners (PO): Occasionally review early features to provide feedback.

- Tooling:

- CI/CD Tools: Jenkins, GitLab CI, CircleCI

- Version Control: GitHub, Bitbucket

- Container Orchestration: Kubernetes, Docker Compose

- Best Practices:

- Commit frequently to ensure continuous integration.

- Automate unit tests and ensure they pass before promoting code to higher environments.

- Use Gitflow or trunk-based development for effective version control and branch management.

1.3. Build Integration Environment

- Purpose: This environment focuses on compiling and integrating code. It allows teams to catch integration errors early, ensuring that the code compiles and is ready for testing. Builds in this environment are usually triggered by automated CI pipelines and are kept separate from feature testing.

- Roles with Access:

- Developers: Can view the results of build pipelines to ensure successful compilation.

- DevOps Engineers: Manage build tools and ensure that build processes are fast and reliable.

- Tooling:

- Build Tools: Maven, Gradle

- CI Tools: Jenkins, Travis CI, TeamCity

- Static Code Analysis: SonarQube

- Best Practices:

- Enforce automated build verification with integration tests.

- Fail fast: Ensure quick feedback to developers when integration issues arise.

- Provide clear error logs to help developers quickly resolve build issues.
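The "fail fast" and "clear error logs" practices can be illustrated with a small stage runner. This is a minimal sketch rather than how any particular CI server works: `run_pipeline` and the three stage functions are hypothetical stand-ins for real build steps.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure.

    Returns (succeeded, log_lines) so the caller can surface a clear error.
    """
    log = []
    for name, step in stages:
        try:
            step()
        except Exception as exc:  # any stage error fails the build
            log.append(f"FAILED {name}: {exc}")
            return False, log
        log.append(f"ok {name}")
    return True, log


def compile_code():
    pass  # stand-in for a real compile step


def integration_tests():
    raise RuntimeError("orders-service returned HTTP 500")  # simulated failure


def package_artifact():
    pass  # never reached when an earlier stage fails


succeeded, log = run_pipeline([
    ("compile", compile_code),
    ("integration-tests", integration_tests),
    ("package", package_artifact),
])
print(succeeded, log)
```

Stopping at the first failing stage keeps feedback quick, and the log names the stage and the error so the developer does not have to search for the cause.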

1.4. System Test Environment

- Purpose: The system test environment (often called SIT, for system integration testing) allows teams to test the complete integrated system. It verifies that the system components work together and that the application meets the overall business and technical requirements.

- Roles with Access:

- QA Engineers: Perform functional, integration, and regression tests.

- SRE (Site Reliability Engineers): Monitor and optimize system performance in real-time scenarios.

- Developers: Collaborate with QA to fix bugs detected during testing.

- Business Analysts: Ensure that system functionalities align with business requirements.

- Tooling:

- Test Automation: Selenium, JUnit, Postman (for API testing)

- Monitoring: Prometheus, Grafana for real-time system performance monitoring

- CI Tools: Jenkins for automated test execution

- Best Practices:

- Automate as much testing as possible to ensure rapid feedback.

- Establish clear entry and exit criteria before moving to the next environment.

- Use real-time monitoring to analyze system behavior under load.
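Exit criteria are easier to enforce when they are written down as a check rather than a convention. The following sketch assumes two illustrative criteria, a minimum pass rate and a cap on open critical defects. The thresholds and the `RunSummary` type are hypothetical and would be set by each team.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    total_tests: int
    passed_tests: int
    open_critical_defects: int


def exit_criteria_failures(summary, min_pass_rate=0.95, max_critical_defects=0):
    """Return the unmet criteria; an empty list means the build may be promoted."""
    failures = []
    pass_rate = summary.passed_tests / summary.total_tests if summary.total_tests else 0.0
    if pass_rate < min_pass_rate:
        failures.append(f"pass rate {pass_rate:.0%} is below {min_pass_rate:.0%}")
    if summary.open_critical_defects > max_critical_defects:
        failures.append(f"{summary.open_critical_defects} critical defect(s) still open")
    return failures


print(exit_criteria_failures(RunSummary(200, 180, 1)))
```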

1.5. User Acceptance Testing (UAT) Environment

- Purpose: UAT is where the business stakeholders or end-users validate the software to ensure it meets their needs and the original requirements. It’s often the final validation step before a release candidate is approved for production.

- Roles with Access:

- End Users: Test the software to ensure it meets their needs and expectations.

- Business Analysts: Coordinate the testing process, facilitate feedback loops, and translate user feedback into actionable changes.

- Product Owners: Validate the product against user stories and sign off on the release.

- Tooling:

- UAT Platforms: TestRail, Zephyr

- Feedback Management: Jira, Azure DevOps

- Best Practices:

- Keep the UAT environment as close to production as possible in terms of data, infrastructure, and configuration.

- Ensure proper sign-offs are documented before promoting changes to staging or production.

- Use realistic data and include the same permissions and roles as would be in production.

1.6. Staging Environment

- Purpose: The staging environment serves as the final step before production. It is often a replica of the production environment and is used for comprehensive final testing, including performance, security, and regression tests. Staging is where release candidates are validated under production-like conditions.

- Roles with Access:

- Developers: Review the final build and ensure that all features are implemented correctly.

- QA Engineers: Conduct final smoke tests to verify critical functionality.

- SREs: Test the infrastructure under load and ensure system resilience.

- DevOps Engineers: Verify the deployment processes and ensure smooth transitions to production.

- Tooling:

- Monitoring: ELK stack, Grafana

- Security Testing: SonarQube, OWASP ZAP

- Performance Testing: Apache JMeter, LoadRunner

- Best Practices:

- Execute final performance and stress tests here before production.

- Rehearse blue-green or canary rollout procedures here so that the production release minimizes the impact of potential bugs on end-users.

- Test monitoring and alerting configurations here so that they work as expected once deployed to production.

1.7. Pre-Production Environment

- Purpose: The pre-production environment is often used for final rehearsals and checks, ensuring that everything is ready for launch. This environment may also be used for compliance and security checks, depending on the nature of the business.

- Roles with Access:

- SREs: Perform final infrastructure checks and ensure the system is ready for scaling in production.

- QA Engineers: Validate that the system has passed all required testing phases.

- Business Analysts: Review final functionality and conduct any last-minute validations.

- Compliance and Security Teams: Conduct audits and checks to ensure compliance with regulations and security protocols.

- Tooling:

- Application Security Testing (supporting compliance checks): Veracode, Checkmarx

- Security Testing: Penetration testing tools (Metasploit, Burp Suite)

- Best Practices:

- Perform dry-run deployments and rehearsals to ensure smooth releases.

- Ensure security and compliance requirements are met, particularly for industries with strict regulatory frameworks.

- Document and sign off on final approvals before moving to production.

1.8. Production Environment

- Purpose: The production environment is the live system where real end-users interact with the application. The primary goal here is to ensure high availability, security, and performance.

- Roles with Access:

- SREs: Monitor system health, optimize performance, and handle incidents in real time.

- DevOps Engineers: Manage infrastructure, automate deployments, and scale services as needed.

- Developers: Have limited or no direct access, except for emergency fixes.

- End Users: Interact with the system, providing feedback through usage and support channels.

- Tooling:

- Monitoring: Datadog, New Relic, ELK stack

- Deployment: Kubernetes, Spinnaker, Jenkins (CI/CD)

- Incident Management: PagerDuty, OpsGenie

- Best Practices:

- Limit access to production, especially for developers, to ensure system integrity and security.

- Implement strict monitoring and alerting systems to detect any performance degradations or issues in real-time.

- Follow blue-green or canary deployments to minimize risk during updates.
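A canary release needs a rule for deciding whether to continue the rollout. One simple approach, sketched below, compares the canary's error rate with the baseline's. The function name, the 1.5x ratio, the error floor, and the minimum sample size are all assumptions chosen for illustration, not recommended values.

```python
def canary_decision(baseline_errors, baseline_requests,
                    canary_errors, canary_requests,
                    max_ratio=1.5, error_floor=0.001, min_requests=100):
    """Return "wait", "promote", or "rollback" for a canary release."""
    if canary_requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    # The floor keeps a near-zero baseline from failing the canary on one error.
    allowed_rate = max(baseline_rate * max_ratio, error_floor)
    return "promote" if canary_rate <= allowed_rate else "rollback"


print(canary_decision(50, 10_000, 3, 500))   # canary 0.6% vs allowed 0.75%
print(canary_decision(50, 10_000, 10, 500))  # canary 2.0% vs allowed 0.75%
```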

2. Supporting Environments and Their Purpose

While the core environments are essential for a stable SDLC, supporting environments add additional layers of validation and testing, ensuring that the application is robust, performant, and resilient to various real-world conditions. These environments often target specific scenarios like stress testing, training, and failure handling.

2.1. Sandbox Environment

- Purpose: A sandbox environment is an isolated space used for experimentation and testing without affecting other environments. Developers can use sandboxes to test new technologies, try out features, or simulate specific scenarios without impacting development or production systems.

- Roles with Access:

- Developers: Create and test experimental features or proof-of-concept work.

- QA Engineers: Run tests on new features or configurations that are not yet stable.

- DevOps Engineers: Experiment with new infrastructure or configuration management tools.

- Tooling:

- Virtualization and Containerization: Docker, Vagrant, Kubernetes

- Infrastructure as Code (IaC): Terraform, Ansible

- Best Practices:

- Ensure the sandbox environment is isolated to prevent accidental changes to shared resources.

- Allow developers and QA teams to experiment freely while encouraging them to bring successful tests into development environments.

- Regularly refresh sandboxes to reflect any changes in production-like setups.

2.2. Performance Test Environment

- Purpose: This environment is designed specifically to evaluate the performance of the system under various conditions. It is used for load testing, stress testing, and benchmarking to ensure that the application can handle expected (and unexpected) traffic and workloads.

- Roles with Access:

- Performance Engineers: Design and execute load and stress tests.

- SREs: Analyze the system’s performance under load and identify bottlenecks.

- DevOps Engineers: Simulate production-like conditions to measure infrastructure scalability and resource consumption.

- Tooling:

- Load Testing Tools: Apache JMeter, LoadRunner

- Performance Monitoring: New Relic, Datadog, Grafana

- Best Practices:

- Test with realistic user loads, including peak and spike traffic scenarios.

- Measure key performance indicators (KPIs) such as response time, throughput, and error rates.

- Ensure that load tests reflect the geographical distribution and concurrency levels expected in production.
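The KPIs named above can be derived from raw request samples. The sketch below assumes each sample is a `(latency_ms, succeeded)` pair collected during a test of known duration, and uses the nearest-rank method for the percentile. The function names are hypothetical, and load testing tools typically report these figures themselves.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer 1..100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples, duration_seconds):
    """Summarize (latency_ms, succeeded) samples into the three KPIs."""
    latencies = [latency for latency, _ in samples]
    errors = sum(1 for _, succeeded in samples if not succeeded)
    return {
        "p95_ms": percentile(latencies, 95),
        "throughput_rps": len(samples) / duration_seconds,
        "error_rate": errors / len(samples),
    }


# 19 fast successful requests and 1 slow failure over a 10-second window.
samples = [(100, True)] * 19 + [(900, False)]
print(summarize(samples, duration_seconds=10))
```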

2.3. Chaos Environment

- Purpose: Chaos engineering environments are used to deliberately introduce disruptions to test the system’s resilience. This involves simulating failure conditions such as network outages, server crashes, or service degradation to assess how well the system can recover.

- Roles with Access:

- SREs: Design and execute chaos experiments to identify system weaknesses.

- DevOps Engineers: Manage the infrastructure and tools used to simulate failures.

- Developers: Collaborate with SREs to fix vulnerabilities discovered during chaos tests.

- Tooling:

- Chaos Engineering Tools: Chaos Monkey, Gremlin, LitmusChaos

- Monitoring and Incident Response: Prometheus, Grafana, PagerDuty

- Best Practices:

- Run chaos experiments regularly, but ensure they are well-documented and controlled to prevent unnecessary disruptions.

- Automate chaos experiments to integrate them into the regular CI/CD pipeline.

- Focus on testing critical parts of the infrastructure, such as database failovers and network partitions.
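At its simplest, a chaos experiment injects a failure and checks that the system degrades gracefully. The sketch below is a toy, in-process illustration of that idea and not how Chaos Monkey, Gremlin, or LitmusChaos operate. `with_chaos`, `call_with_fallback`, and the price lookup are hypothetical.

```python
import random


class InjectedFault(Exception):
    """Raised by the chaos wrapper to simulate a dependency failure."""


def with_chaos(func, failure_rate, rng):
    """Wrap func so that a fraction of calls fail with InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return func(*args, **kwargs)
    return wrapper


def call_with_fallback(primary, fallback, retries=2):
    """Try primary up to retries + 1 times, then use the fallback."""
    for _ in range(retries + 1):
        try:
            return primary()
        except InjectedFault:
            continue
    return fallback()


def live_price():
    return 42.0  # stand-in for a call to a real dependency


def cached_price():
    return 41.5  # stale but safe value served during an outage


flaky_price = with_chaos(live_price, failure_rate=0.5, rng=random.Random(7))
print(call_with_fallback(flaky_price, cached_price))
```

Passing a seeded `random.Random` keeps the experiment reproducible, which supports the practice of keeping chaos tests documented and controlled.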

2.4. Beta Environment

- Purpose: A beta environment is a pre-production setup where a subset of real users can test new features before they are fully released to production. This environment helps gather feedback from users in a controlled manner, identifying potential issues or improvements before a full-scale rollout.

- Roles with Access:

- End Users (Beta Testers): Provide real-world feedback on new features or enhancements.

- Product Owners (PO): Manage beta releases, gather feedback, and make decisions on feature readiness.

- Developers and QA Engineers: Monitor user feedback and resolve any issues raised during the beta phase.

- Tooling:

- Feature Flag Tools: LaunchDarkly, Optimizely

- Feedback Management: Jira, Azure DevOps

- Best Practices:

- Use feature flags to control which users can access beta features.

- Engage with beta testers actively to gather meaningful feedback.

- Monitor usage metrics to evaluate the performance and stability of new features in real-world scenarios.
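One common way to implement a percentage-based beta rollout is to hash each user into a stable bucket. The sketch below shows that idea under simple assumptions. It is not the algorithm used by LaunchDarkly or Optimizely, and the function name and flag name are hypothetical.

```python
import hashlib


def in_rollout(flag, user_id, percent):
    """Return True if user_id falls inside the first `percent` of 100 buckets.

    Hashing flag and user together gives each user a stable bucket per flag,
    so the same user sees consistent behavior across requests.
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < percent


users = [f"user-{n}" for n in range(1000)]
enabled = sum(in_rollout("new-checkout", user, 10) for user in users)
print(f"{enabled} of {len(users)} users see the beta feature")
```

Because a user's bucket never changes, raising the percentage only adds users to the beta group and never removes anyone who already had access.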

3. Tailoring Environments to Specific Needs

Certain environments cater to specific business or technical requirements, particularly in complex systems or enterprises that must support various user groups, compliance standards, and business needs.

3.1. Multi-Tenant Environment

- Purpose: A multi-tenant environment is used to test applications that serve multiple clients (or tenants) with proper data segregation and resource allocation. This is particularly relevant for SaaS platforms, where a single application serves multiple customers, each with their own datasets and configurations.

- Roles with Access:

- Developers: Test code modifications in tenant-specific contexts to ensure proper data segregation and feature flag handling.

- DevOps Engineers: Configure infrastructure to support multiple tenants while maintaining resource isolation.

- SREs: Ensure the system can scale horizontally to accommodate increasing numbers of tenants without performance degradation.

- Tooling:

- Multi-Tenant Database Partitioning: Postgres schemas, MySQL sharding

- Container Orchestration: Kubernetes for multi-tenant resource isolation

- Best Practices:

- Simulate tenant-specific use cases to ensure there is no data leakage between tenants.

- Design the environment to handle different workloads, configurations, and access controls per tenant.

- Regularly test scalability, as the number of tenants may grow rapidly over time.
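A data-leakage test can be as simple as querying on behalf of one tenant and asserting that no other tenant's rows come back. The sketch below uses an in-memory SQLite database with a shared table keyed by `tenant_id`. The table, tenant names, and helper functions are hypothetical, and a real setup might use Postgres schemas instead.

```python
import sqlite3


def fetch_invoices(conn, tenant_id):
    """Return the invoices visible to one tenant (parameterized query)."""
    cursor = conn.execute(
        "SELECT tenant_id, amount FROM invoices WHERE tenant_id = ? ORDER BY amount",
        (tenant_id,),
    )
    return cursor.fetchall()


def leaked_rows(conn, tenant_id):
    """Return any rows in the tenant's result set that belong to someone else."""
    return [row for row in fetch_invoices(conn, tenant_id) if row[0] != tenant_id]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (tenant_id TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?)",
    [("acme", 100), ("acme", 250), ("globex", 75)],
)

for tenant in ("acme", "globex"):
    print(tenant, fetch_invoices(conn, tenant), "leaks:", leaked_rows(conn, tenant))
```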

3.2. Training Environment

- Purpose: A training environment is set up to onboard new developers, train end-users, or offer support team members a risk-free environment to learn how to use the system. This environment contains realistic data and settings but does not impact production in any way.

- Roles with Access:

- New Developers: Familiarize themselves with the system and its architecture.

- End Users: Learn how to use the system without impacting production data or processes.

- Product Owners: Organize training sessions and provide training materials.

- Tooling:

- Virtualization and Cloud Platforms: AWS Training Sandboxes, GCP, Azure

- Application Simulation Tools: Custom-built simulators for user training

- Best Practices:

- Use realistic, anonymized production-like data to give users an authentic experience.

- Ensure training environments are regularly updated to reflect new features or workflows.

- Implement role-based access controls to ensure that only appropriate users have access to sensitive data.
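Preparing production-like data for training usually means masking the sensitive fields while leaving the rest intact. The sketch below replaces chosen fields with a keyed hash. Strictly speaking this is pseudonymization rather than full anonymization, and the field names, secret, and helper names are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value, secret):
    """Replace a value with a short keyed hash; the same input gives the same token."""
    return hmac.new(secret, value.encode(), hashlib.sha256).hexdigest()[:12]


def mask_record(record, sensitive_fields, secret):
    """Return a copy of record with the sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret) if key in sensitive_fields else value
        for key, value in record.items()
    }


secret = b"training-env-secret"  # illustrative only; keep real keys out of source code
customer = {"email": "jane@example.com", "name": "Jane Doe", "plan": "pro"}
print(mask_record(customer, {"email", "name"}, secret))
```

Deterministic tokens keep relationships between records intact (the same customer maps to the same token everywhere), which helps the training data behave like the real system.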

3.3. Backup Environment

- Purpose: A backup environment is used to test disaster recovery procedures and ensure that backup systems work effectively. This environment simulates production-level backup and restore operations to validate that recovery times meet business requirements.

- Roles with Access:

- SREs: Validate backup integrity and test disaster recovery scenarios.

- DevOps Engineers: Manage backup infrastructure and automation processes.

- Compliance Teams: Ensure backup procedures meet regulatory requirements for data retention and recovery.

- Tooling:

- Backup Solutions: Veeam, AWS Backup, Azure Backup

- Disaster Recovery Tools: Zerto, CloudEndure

- Best Practices:

- Regularly test backup and restore processes to ensure they meet Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO).

- Automate backup verification processes to ensure that backups are complete and consistent.

- Store backups in multiple locations (on-premises and cloud) to avoid a single point of failure.
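The RTO and RPO check from a recovery drill can be expressed in a few lines: the RPO bounds how much data may be lost (the time between the last good backup and the failure), and the RTO bounds how long the restore may take. The function name and the timestamps below are hypothetical.

```python
from datetime import datetime, timedelta


def evaluate_recovery(last_backup_at, failure_at, restore_duration, rpo, rto):
    """Compare one recovery drill against its RPO and RTO targets."""
    data_loss_window = failure_at - last_backup_at
    return {
        "data_loss_window": data_loss_window,
        "rpo_met": data_loss_window <= rpo,
        "rto_met": restore_duration <= rto,
    }


result = evaluate_recovery(
    last_backup_at=datetime(2024, 1, 10, 2, 0),
    failure_at=datetime(2024, 1, 10, 5, 30),
    restore_duration=timedelta(minutes=45),
    rpo=timedelta(hours=4),
    rto=timedelta(hours=1),
)
print(result)
```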

4. Technical Operating Model (TOM) for Environment Management

Effective management of environments throughout the SDLC requires a clear Technical Operating Model (TOM) that defines how environments interact, who is responsible for each one, and how they are maintained. This section covers the governance, automation, and monitoring aspects of environment management.

4.1. Environment Governance

- Defining Responsibilities: Different teams play distinct roles in managing and operating environments. Governance is crucial to ensuring that the environments remain stable, secure, and available for their intended purposes.

- Developers: Focus on code quality, integration, and early testing in development and local environments.

- QA Engineers: Responsible for validating code functionality across multiple environments, from development to UAT and staging.

- DevOps Engineers: Manage CI/CD pipelines, environment provisioning, and infrastructure as code.

- SREs: Monitor system performance, manage production stability, and handle incident response.

- Product Owners and Business Analysts: Collaborate on UAT and staging to ensure the application meets business needs before release.

- Best Practices:

- Clearly define ownership for each environment, from local to production.

- Implement strict access controls, particularly in staging and production, to maintain security.

- Ensure all changes to higher environments are logged and auditable.
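Access control and auditability can be combined in a single check: every request is evaluated against a permission matrix and recorded whether or not it is allowed. The matrix below is a hypothetical example loosely based on the roles described in this post, not a recommended policy.

```python
# Hypothetical permission matrix: environment -> role -> allowed actions.
PERMISSIONS = {
    "production": {
        "sre": {"read", "deploy"},
        "devops": {"read", "deploy"},
        "developer": set(),
    },
    "staging": {
        "sre": {"read", "deploy"},
        "devops": {"read", "deploy"},
        "developer": {"read"},
        "qa": {"read"},
    },
}


def authorize(role, environment, action, audit_log):
    """Return whether the action is allowed and record the attempt either way."""
    allowed = action in PERMISSIONS.get(environment, {}).get(role, set())
    audit_log.append(
        {"role": role, "environment": environment, "action": action, "allowed": allowed}
    )
    return allowed


audit_log = []
print(authorize("developer", "production", "deploy", audit_log))
print(authorize("sre", "production", "deploy", audit_log))
print(audit_log)
```

Unknown roles and environments fall through to an empty permission set, so the default is to deny.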

4.2. Automation in Environment Management

- Purpose: Automation is critical in managing complex environments at scale, ensuring consistency, reducing human errors, and improving operational efficiency. Automating environment setup, configuration, and monitoring leads to faster development cycles and more reliable systems.

- Tooling:

- Infrastructure as Code (IaC): Terraform, CloudFormation, Ansible for provisioning environments.

- CI/CD: Jenkins, GitLab CI, Spinnaker for automating build, test, and deployment processes.

- Configuration Management: Puppet, Chef for ensuring environments are configured identically.

- Best Practices:

- Use IaC to define and provision environments automatically, reducing manual intervention and configuration drift.

- Automate environment testing as part of the CI/CD pipeline to ensure new changes don’t break existing functionality.

- Implement automated scaling in performance-critical environments like production and performance testing.
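The core idea behind using IaC to limit configuration drift is that every environment is generated from one shared definition, with only a small, explicit set of differences. The sketch below shows that idea in plain Python rather than Terraform or CloudFormation syntax. The keys, values, and allowed overrides are hypothetical.

```python
# Shared definition that every environment starts from.
BASE = {
    "image": "app:1.4.2",
    "database_engine": "postgres-15",
    "replicas": 1,
    "log_level": "info",
}

# Only these keys may differ between environments.
ALLOWED_OVERRIDES = {"replicas", "log_level"}

OVERRIDES = {
    "dev": {"log_level": "debug"},
    "staging": {"replicas": 2},
    "production": {"replicas": 6},
}


def render_environment(name):
    """Build an environment's configuration from BASE plus its allowed overrides."""
    overrides = OVERRIDES[name]
    illegal = set(overrides) - ALLOWED_OVERRIDES
    if illegal:
        raise ValueError(f"{name} overrides keys that must stay identical: {sorted(illegal)}")
    return {**BASE, **overrides}


for name in OVERRIDES:
    print(name, render_environment(name))
```

Rejecting overrides of keys such as the image or database engine means those settings cannot silently diverge between staging and production.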

4.3. Monitoring and Feedback Loops

- Purpose: Continuous monitoring and feedback loops are essential to maintaining the health of environments, especially in production. Monitoring provides insights into system performance, error rates, and user interactions, while feedback loops help developers and operators address issues in real time.

- Tooling:

- Monitoring Tools: Prometheus, Datadog, ELK Stack (Elasticsearch, Logstash, Kibana)

- Incident Management: PagerDuty, OpsGenie for automated alerting and incident tracking.

- Best Practices:

- Set up continuous monitoring for key metrics like CPU usage, memory, error rates, and response times.

- Ensure feedback loops are integrated into the SDLC so that any issues discovered in testing or production are promptly addressed.

- Implement automated alerting for critical failures in production environments to minimize downtime.
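Automated alerting comes down to comparing current metrics with agreed thresholds. The sketch below uses the metrics listed above with made-up limits. In practice these rules would live in a monitoring tool's alert configuration rather than in application code.

```python
# Hypothetical alert thresholds; real values depend on the system.
THRESHOLDS = {
    "cpu_percent": 85.0,
    "memory_percent": 90.0,
    "error_rate": 0.02,
    "p95_latency_ms": 800.0,
}


def breached(metrics, thresholds=THRESHOLDS):
    """Return the sorted names of metrics that exceed their threshold."""
    return sorted(
        name for name, limit in thresholds.items() if metrics.get(name, 0.0) > limit
    )


current = {"cpu_percent": 91.2, "memory_percent": 70.0, "error_rate": 0.035, "p95_latency_ms": 420.0}
print(breached(current))
```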

5. Best Practices for Managing Multiple Environments

Managing multiple environments can become complex, particularly when dealing with larger-scale projects or distributed teams. The following best practices help ensure smooth operations and alignment across all environments.

- Environment Parity: Ensure that non-production environments (e.g., staging, UAT) mirror the production environment as closely as possible in terms of infrastructure, configurations, and data. This reduces the risk of issues arising in production that were not caught during testing.

- Data Management: Use anonymized, production-like data in testing environments to simulate real-world scenarios while protecting sensitive information. This enables more accurate testing while maintaining compliance with data privacy regulations.

- Security: Implement role-based access control (RBAC) across environments, ensuring that only authorized personnel have access to sensitive environments like production and staging.

- Release Methodologies: Use deployment strategies such as blue-green deployments, canary releases, and feature flags to make production releases smoother and lower-risk.

- Automation: Automate as much of the environment provisioning, testing, and deployment processes as possible to ensure consistency and efficiency across the SDLC.

Conclusion

The decision on how many environments are needed throughout the SDLC is heavily influenced by the software’s complexity, the size of the development team, and specific business or regulatory requirements. While there is no “one-size-fits-all” solution, a well-thought-out approach can ensure the right balance between agility and stability. By carefully selecting and managing environments, teams can ensure smooth software delivery, minimize risks, and meet both technical and business requirements.

Key takeaways:

- Tailor your environment strategy to the complexity and needs of your project, striking a balance between too many and too few environments.

- Automate environment management wherever possible to reduce errors and accelerate the development process.

- Enforce strong governance and access control to maintain security, especially in higher environments like production.

By adopting best practices in environment management, teams can streamline the SDLC, deliver higher-quality software, and ensure a seamless transition from development to release.
