Many organizations use Kubernetes to manage and scale their applications. As a container orchestration platform, Kubernetes automates the deployment, scaling, and management of containerized software. This guide explores Kubernetes architecture: the core components and how they work together to run containers at scale.

What is Kubernetes Architecture?

Kubernetes is an open-source system that manages containerized applications across multiple hosts. It is itself a distributed system: control plane components store the desired state of the cluster, and agents on each machine work to make the actual state match it.

Core Components of Kubernetes Architecture

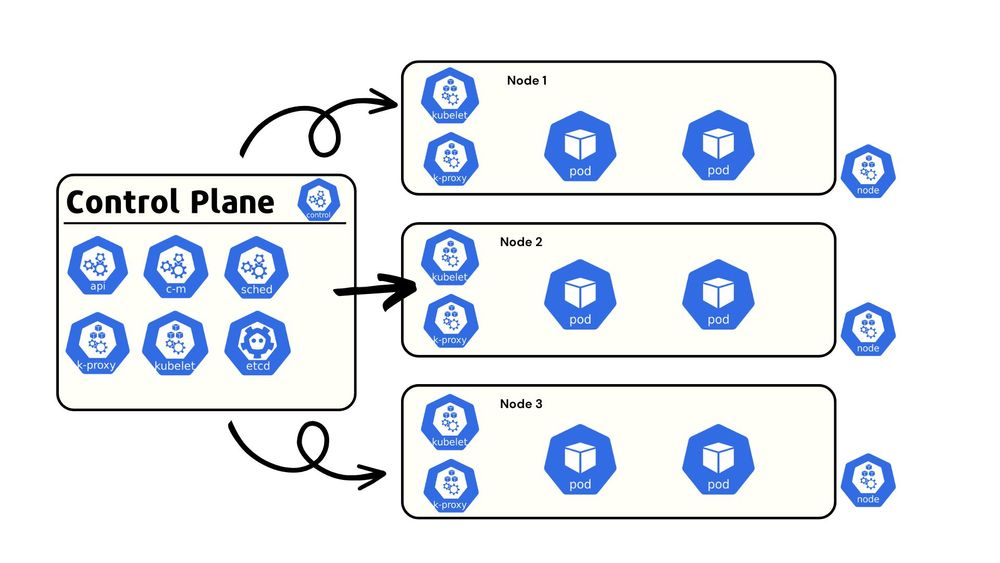

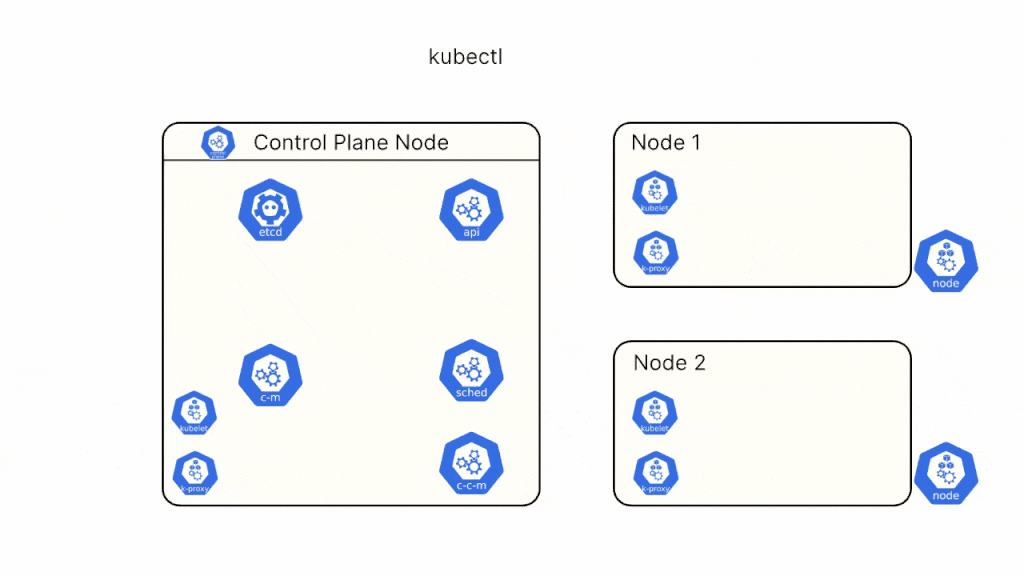

A Kubernetes cluster consists of Nodes (individual machines) that work together to manage workloads. These Nodes are split into two types:

- Control Plane Nodes: The core management layer containing essential components.

- Worker Nodes: Machines designated for running application workloads.

To ensure high availability and fault tolerance, production clusters typically run multiple Control Plane Nodes and a multi-member etcd cluster.

Kubernetes Control Plane Components

The Control Plane houses the essential components necessary for running the cluster. Here’s a look at each of these:

1. API Server

- Acts as the entry point for the Kubernetes cluster.

- Receives and routes requests from external clients and other Control Plane components.

- Handles authentication, authorization, and validation of requests. A command such as `kubectl apply -f` is checked here, and the resulting object is persisted to etcd, where other components pick it up by watching the API Server.
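The request path described above can be pictured as a chain of checks. The following is a toy model in Python, not the API Server's actual code: the `Request` type, the `handle` function, the token table, and the permission set are all hypothetical stand-ins, and the dictionary `store` plays the role of etcd.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-ins for real authentication and RBAC data.
TOKENS = {"token-alice": "alice"}
PERMISSIONS = {("alice", "create", "deployments")}


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    body: dict


def handle(request: Request, store: dict) -> tuple[int, str]:
    """Run a request through authenticate -> authorize -> validate -> persist."""
    user = TOKENS.get(request.token)
    if user is None:
        return 401, "unauthenticated"
    if (user, request.verb, request.resource) not in PERMISSIONS:
        return 403, "forbidden"
    name = request.body.get("metadata", {}).get("name")
    if not name:
        return 422, "metadata.name is required"
    # Only after every check passes is the object written to the store
    # (the role etcd plays in a real cluster).
    store[f"/{request.resource}/{name}"] = request.body
    return 201, "created"
```

The ordering is the point of the sketch: a request that fails an early stage never reaches the store.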

2. etcd

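- A distributed key-value store that holds the cluster’s state: the configuration and status of every API object.

- Accessed directly only by the API Server; other components read and write cluster state through the API, which helps maintain security and consistency.

- Can be deployed in a stacked topology (etcd members run on the Control Plane Nodes) or an external topology (etcd runs on dedicated hosts); in production, a highly available, multi-member etcd cluster is preferred for resilience.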
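etcd needs a majority of its members (a quorum) to agree before it accepts a write, which is why highly available clusters usually have an odd number of members. The arithmetic can be sketched in a few lines of Python; the function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """How many members can fail while a majority still remains."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(n, quorum(n), failure_tolerance(n))
```

A 3-member cluster has a quorum of 2 and tolerates 1 failure. Adding a fourth member raises the quorum to 3 without improving tolerance, while a 5-member cluster tolerates 2 failures.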

3. Scheduler

- Decides where workloads (pods) should run.

- Evaluates available nodes, taking into account resource requirements and any predefined rules, to assign pods to suitable nodes.
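Scheduling is commonly described as two phases: filtering out nodes that cannot run the pod, then scoring the remaining ones. Below is a minimal sketch of that idea, assuming only CPU and memory requests matter. The `Node` and `PodRequest` types and the scoring rule are hypothetical; the real scheduler applies many more filters and scoring plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Filter out nodes that cannot fit the pod, then pick the best-scoring one."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Hypothetical scoring rule: prefer the node left with the most free CPU.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
    return best.name
```

Returning `None` mirrors what happens when no node fits: the pod is not placed anywhere until capacity becomes available.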

4. Controller Manager

- A single process that runs many controllers, each managing a specific kind of cluster resource (e.g., Deployment Controller, Endpoint Controller).

- Each controller runs a control loop that moves its resources toward the desired state. For instance, the Deployment Controller manages ReplicaSets, which in turn keep the requested number of pod replicas running.
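The desired-state idea behind every controller can be reduced to a small comparison step. The sketch below is a simplified illustration, not controller source code: `reconcile` and its pod naming scheme are hypothetical, and a real controller would act through the API Server instead of returning strings.

```python
def reconcile(desired_replicas: int, running: list[str]) -> list[str]:
    """Return the actions needed to move the observed state to the desired state."""
    actions = []
    diff = desired_replicas - len(running)
    if diff > 0:
        # Too few pods: create the missing ones.
        actions += [f"create pod-{len(running) + i}" for i in range(diff)]
    elif diff < 0:
        # Too many pods. Hypothetical policy: remove the last-listed pods first.
        actions += [f"delete {name}" for name in running[diff:]]
    return actions
```

When the observed state already matches the desired state, the function returns an empty list, which is the steady state a control loop spends most of its time in.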

5. Cloud Controller Manager

- Connects the cluster to a cloud provider’s API (e.g., AWS, Azure), keeping cloud-specific logic separate from the core Kubernetes components.

- Manages cloud-backed resources such as load balancers for Services, network routes, and node lifecycle information, enabling the cluster to interact dynamically with cloud infrastructure.

Kubernetes Worker Node Components

Worker nodes handle application workloads in the form of pods. Each worker node contains:

1. Kubelet

- Responsible for creating and maintaining pods on its node.

- Runs as a system daemon on every node, watching the API Server for pods assigned to that node and ensuring their containers run as specified.

2. Kube Proxy

- Maintains the network rules on each node that implement Kubernetes Services, so that traffic sent to a Service reaches one of its backing pods.

- Typically deployed as a DaemonSet, so that one instance runs on every node.
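Conceptually, the forwarding described above maps a Service address to one of several pod addresses. The Python sketch below models only that mapping. The addresses, the `SERVICE_ENDPOINTS` table, and the `route` function are made up for illustration; the real Kube Proxy programs kernel-level rules (for example with iptables or IPVS) instead of handling each connection itself.

```python
import random

# Hypothetical Service table: (virtual IP, port) -> backend pod addresses.
SERVICE_ENDPOINTS = {
    ("10.96.0.10", 80): ["10.244.1.5:8080", "10.244.2.7:8080"],
}


def route(service_ip: str, port: int, rng: random.Random) -> str:
    """Pick one backend pod address for a connection to a Service address."""
    backends = SERVICE_ENDPOINTS.get((service_ip, port))
    if not backends:
        raise LookupError(f"no endpoints for {service_ip}:{port}")
    # Random choice spreads connections across the backing pods.
    return rng.choice(backends)
```

Passing the random number generator in as a parameter keeps the sketch deterministic under test, for example with `random.Random(0)`.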

3. Container Runtime

- Runs containers within each pod.

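- Most clusters now use containerd; dockershim, the built-in Docker Engine integration, was deprecated and then removed in Kubernetes 1.24. Other runtimes that implement the Container Runtime Interface (CRI), such as CRI-O, are also supported, and Mirantis Container Runtime can be used through the cri-dockerd adapter.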

Kubernetes Cluster Workflow: A Deployment Example

Let’s break down what happens in a Kubernetes cluster when a new deployment is created:

- Command Execution: A deployment command (e.g., `kubectl create deployment nginx --image=nginx --replicas=4`) is issued via kubectl, which sends the request to the API Server along with the user’s credentials.

- Request Handling: The API Server authenticates, authorizes, and validates the request, then records the Deployment in etcd.

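- Controller Notification: The Deployment Controller in the Controller Manager notices the new Deployment and creates a ReplicaSet, whose controller in turn creates the pod objects.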

- Pod Scheduling: The Scheduler assigns the deployment’s pods to nodes with available resources.

- Pod Creation: Kubelet on each assigned node creates and configures the pods, while the container runtime launches containers within each pod.

- Status Update: The Kubelet on each node reports pod status to the API Server, which stores the latest status in etcd.
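The object that travels through this workflow is a Deployment resource. As a rough illustration, the Python sketch below builds a manifest comparable to what the example command submits. The `deployment_manifest` helper is hypothetical, and the Deployment name `nginx` and the `app` label are assumptions chosen for the example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment object comparable to what the kubectl command submits."""
    labels = {"app": name}  # assumed label; the selector must match the pod labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


print(json.dumps(deployment_manifest("nginx", "nginx", 4), indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and submitted with `kubectl apply -f`, after which the steps above proceed in the same way.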

Essential Kubernetes Add-ons

To extend Kubernetes functionality, several add-ons are commonly deployed in clusters:

- CNI (Container Network Interface) plugin: Provides pod networking for the cluster; depending on the plugin, it may also support network policies, load balancing, and more.

- CoreDNS: Facilitates service discovery by mapping services and pods to DNS names.

- Metrics Server: Gathers resource usage data (CPU, memory) for nodes and pods.

- Dashboard: A web-based UI for managing Kubernetes clusters, offering visibility and troubleshooting capabilities.

Conclusion

Kubernetes is a sophisticated orchestration system with a highly extensible architecture. Its core components collaborate to run, manage, and scale containerized applications, while add-ons enhance functionality and monitoring. A strong understanding of Kubernetes architecture is crucial for developers and DevOps professionals alike, enabling them to troubleshoot effectively, optimize workflows, and design applications that leverage Kubernetes’ powerful capabilities.