Table of Contents

- Project Overview

- Technology Stack

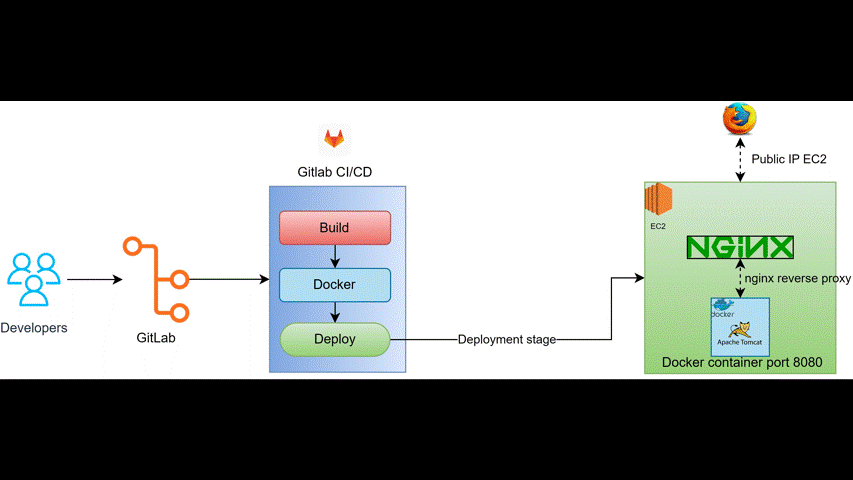

- Architecture Diagram

- Step 1: Prerequisites

- Step 2: Configuring GitLab as Version Control

- Step 3: Preparing AWS Resources

- Step 4: Building and Pushing the Docker Image

- Step 5: Setting Up Amazon ECS with Fargate

- Step 6: Creating the GitLab CI/CD Pipeline

- Step 7: Adding Monitoring with AWS CloudWatch

- Conclusion

- Author

In today’s fast-paced software development landscape, businesses face increasing pressure to deliver high-quality applications quickly and efficiently. Traditional development models often struggle to keep up with these demands, leading to bottlenecks, delays, and missed opportunities. DevOps, with its emphasis on collaboration, automation, and continuous integration/continuous delivery (CI/CD), offers a powerful solution to these challenges. This comprehensive guide will walk you through the process of building a robust CI/CD pipeline using GitLab CI/CD, Docker, and AWS Fargate, enabling you to automate your application deployments, improve scalability, and enhance overall development velocity. You’ll learn how to leverage these powerful tools to streamline your workflow, from code commit to production deployment, allowing you to focus on building innovative features rather than managing complex infrastructure.

Project Overview

This guide demonstrates how to build an automated CI/CD pipeline for a simple web application. By the end of this tutorial, you'll have a working pipeline that automatically builds a Docker image of your application, pushes it to an Amazon Elastic Container Registry (ECR) repository, and deploys it to AWS Fargate. This setup enables seamless deployment, easy scaling, and efficient monitoring of your application, and gives you a practical foundation for deploying your own applications with this technology stack. The project mirrors a real-world scenario in which CI/CD automates the deployment process, with these benefits: less manual intervention, faster deployments, improved reliability, and easier scaling. With deployments automated, developers can focus on code quality and feature development, which leads to faster release cycles and better product quality.

Technology Stack

We’ll be using the following technologies:

- GitLab: Our version control system and CI/CD platform. GitLab’s integrated CI/CD features make it a powerful and convenient choice for managing the entire software development lifecycle. (Source: GitLab’s 2022 Global DevSecOps Survey Report indicated increased adoption of GitLab for CI/CD.)

- Docker: For containerizing our application. Docker ensures consistency across different environments and simplifies deployment. (Source: Docker’s official website states that millions of developers use Docker to build and share containerized applications.)

- AWS Fargate: A serverless compute engine for containers. Fargate eliminates the need to manage servers, allowing us to focus on our application. (Source: AWS reports that Fargate reduces operational overhead by up to 70%.)

- Amazon ECR: A fully managed Docker container registry that makes it easy to store, manage, and deploy Docker container images. (Source: AWS documentation highlights ECR’s security and scalability benefits.)

- AWS CloudWatch: For monitoring our application and infrastructure. CloudWatch provides valuable insights into application performance and health. (Source: AWS CloudWatch documentation emphasizes its real-time monitoring capabilities.)

Each tool plays a crucial role in creating a robust, automated, and scalable CI/CD pipeline. The combination of these technologies provides a powerful and efficient workflow for modern software development.

Architecture Diagram

The diagram illustrates the flow of the CI/CD pipeline: code changes pushed to GitLab trigger the pipeline, which builds a Docker image of the application, pushes the image to Amazon ECR, and then deploys it to AWS Fargate. This automated process ensures consistent, reliable deployments with minimal manual intervention.

Step 1: Prerequisites

Before you begin, make sure you have the following:

- A GitLab account: If you don’t have one, you can create a free account on GitLab.com.

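- An AWS account: You’ll need an AWS account to use ECR and Fargate. Some services used here have free-tier allowances (for example, ECR storage), but Fargate tasks are billed for the vCPU and memory they use, so delete the ECS service when you finish the tutorial.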

- Docker Desktop: Install Docker Desktop on your local machine to build and test Docker images.

- AWS CLI: Install the AWS CLI on your local machine and configure it with credentials (for example, by running `aws configure`) so that it can interact with AWS services.

- A Node.js application (or any application you wish to deploy): For this tutorial, we will use a simple Node.js application. You can use an existing application or create a new one.

Having these prerequisites set up correctly will ensure a smooth and efficient process throughout the tutorial. It is essential to verify that all configurations are working as expected before proceeding to the next steps.
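One way to verify the local setup is a short script. The following is a minimal Python sketch, assuming `git`, `docker`, and `aws` are the command names on your PATH; it checks that each tool is installed and that the AWS CLI can find working credentials via `aws sts get-caller-identity`.

```python
import shutil
import subprocess

# Command names this tutorial relies on (Docker Desktop installs `docker`)
REQUIRED_TOOLS = ["git", "docker", "aws"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the names in `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def aws_identity():
    """Return `aws sts get-caller-identity` output, or None if the CLI or credentials fail."""
    try:
        result = subprocess.run(
            ["aws", "sts", "get-caller-identity"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (OSError, subprocess.CalledProcessError, subprocess.TimeoutExpired):
        return None
    return result.stdout
```

An empty list from `missing_tools()` and a JSON identity document from `aws_identity()` suggest you are ready to continue.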

Step 2: Configuring GitLab as Version Control

2.1: Fork the Repository

- Navigate to the project repository on GitLab.

- Click the “Fork” button in the top right corner.

- Choose a namespace for your forked repository.

- Click “Fork project.”

Example screenshot showing the fork button on GitLab. Common issues: Ensure you’re logged into your GitLab account. If you encounter permission issues, verify your access rights to the original repository.


2.2: Clone the Repository

- Open your terminal.

- Navigate to the directory where you want to clone the repository.

- Run `git clone <repository_url>`, replacing `<repository_url>` with the URL of your forked repository:

```shell
git clone https://gitlab.com/<your_username>/<your_repository>.git
```

This command downloads the repository to your local machine. You can then make changes and push them back to GitLab.

2.3: Push Updates (Optional)

If you made changes to the code:

- Navigate to the repository directory on your local machine.

- Stage the changes:

```shell
git add .
```

- Commit the changes:

```shell
git commit -m "Your commit message"
```

- Push the changes to your forked repository:

```shell
git push origin main
```

Pushing updates to your forked repository ensures that your changes are tracked and available for the CI/CD pipeline. This step is crucial for triggering automated builds and deployments whenever you make code modifications.

Step 3: Preparing AWS Resources

3.1: Create an Amazon ECR Repository

- Log into the AWS Management Console.

- Navigate to the Elastic Container Registry (ECR) service.

- Click “Create repository.”

- Enter a repository name (e.g., “my-app-repo”).

- Click “Create repository.”

Example screenshot showing the ECR creation page on AWS.


3.2: Authenticate Docker with ECR

- Open your terminal.

- Run the following AWS CLI command, replacing `<your_region>` and `<your_account_id>` with your AWS region and account ID, respectively:

```shell
aws ecr get-login-password --region <your_region> | docker login --username AWS --password-stdin <your_account_id>.dkr.ecr.<your_region>.amazonaws.com
```

This command retrieves a temporary login password (valid for 12 hours) and pipes it to `docker login` to authenticate Docker with your ECR registry.
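The registry host in the login command follows a fixed pattern. This minimal Python sketch builds it, and the full image URI used in the next steps, from the account ID and region; it assumes the standard AWS partition (China regions use a different domain) and only checks that the account ID has 12 digits.

```python
def ecr_registry(account_id: str, region: str) -> str:
    """Return the private ECR registry host, as used by `docker login`.

    Assumes the standard AWS partition; China regions use amazonaws.com.cn.
    """
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("an AWS account ID is exactly 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Return the full image reference used by `docker build -t` and `docker push`."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"
```

For example, `ecr_image_uri("123456789012", "us-east-1", "my-app-repo", "v1")` returns `123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app-repo:v1`.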

3.3: Create an ECS Cluster

- Navigate to the Elastic Container Service (ECS) in the AWS Management Console.

- Click “Clusters.”

- Click “Create Cluster.”

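- Give your cluster a name (e.g., “my-app-cluster”) and choose Fargate-only infrastructure: in the current console, select “AWS Fargate (serverless)”; in the older console, choose the “Networking only” template.

- Click “Create.” A Fargate-only cluster needs no EC2 instances, so there are no servers for you to manage.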

3.4: IAM Role, VPC, and Security Groups

- IAM Role: Create a task execution role that ECS uses to pull images from ECR and send container logs to CloudWatch. The AWS managed policy `AmazonECSTaskExecutionRolePolicy` grants both. Broader policies such as `AmazonEC2ContainerRegistryPowerUser` (which also allows pushing images) and `AmazonECS_FullAccess` (full control of ECS) grant more than a running task needs; they are better suited to the IAM user that the CI/CD pipeline uses to push images and update the service.

- VPC: You will need a VPC for your Fargate tasks to run in. If you don’t have an existing VPC, create a new one with appropriate subnets and routing. The VPC provides a secure and isolated network environment for your application.

- Security Groups: Create security groups to control inbound and outbound traffic to your Fargate tasks. Configure the security groups to allow traffic on the ports your application uses. Properly configured security groups are essential for protecting your application from unauthorized access.
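For reference, a role that ECS tasks can assume needs a trust policy naming the `ecs-tasks.amazonaws.com` service principal. The minimal Python sketch below generates that trust policy and a single inbound rule for the application port in the `IpPermissions` shape used by the EC2 API. The open `0.0.0.0/0` CIDR and the file name `trust.json` are illustrative assumptions; narrow the CIDR for production.

```python
import json


def ecs_task_trust_policy() -> dict:
    """Trust policy that lets ECS tasks assume the role (e.g. a task execution role)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def ingress_rule(port: int, cidr: str = "0.0.0.0/0") -> dict:
    """One inbound TCP rule in the EC2 IpPermissions shape.

    The default CIDR allows the whole internet; it is an assumption for this demo.
    """
    if not 0 < port < 65536:
        raise ValueError("port must be between 1 and 65535")
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr}],
    }


def write_trust_policy(path: str = "trust.json") -> None:
    """Save the trust policy as JSON for use with the AWS CLI (file name is hypothetical)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(ecs_task_trust_policy(), f, indent=2)
```

After `write_trust_policy()`, the file can be passed to `aws iam create-role --role-name ecsTaskExecutionRole --assume-role-policy-document file://trust.json`, and the managed policy attached with `aws iam attach-role-policy --role-name ecsTaskExecutionRole --policy-arn arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy`; the role name here is only an example.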

Step 4: Building and Pushing the Docker Image

4.1: Build the Docker Image

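- Create a `Dockerfile` in the root directory of your application.

- Add the following instructions to your `Dockerfile`:

```dockerfile
# Node.js base image (node:16 reached end-of-life in 2023, so a current LTS tag is safer)
FROM node:20
WORKDIR /app
# Copy the manifests first so the npm install layer stays cached until dependencies change
COPY package*.json ./
RUN npm install
COPY . .
# Must match the container port mapping in the ECS task definition
EXPOSE 3000
CMD ["npm", "start"]
```

This Dockerfile defines the environment for your application, copies the necessary files, installs dependencies, and starts the application. Adjust it to your application’s requirements, for example the base image version, the exposed port, or the start command.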

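- Open your terminal, navigate to the root directory of your application, and run the following command, replacing the placeholders with your own details:

```shell
docker build -t <your_account_id>.dkr.ecr.<your_region>.amazonaws.com/<your_repository_name>:<tag> .
```

This command builds the Docker image and tags it with the ECR repository URI. Fargate runs tasks on x86_64 (linux/amd64) by default, so if you build on an ARM machine such as an Apple silicon Mac, add `--platform linux/amd64` to the build command.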

4.2: Tag the Docker Image

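Tagging the Docker image identifies each version of your application within ECR. Use meaningful tags, such as version numbers, release names, or commit hashes, so that images are easy to tell apart. If you built an image without the ECR URI, you can add it afterwards with `docker tag <local_image>:<tag> <your_account_id>.dkr.ecr.<your_region>.amazonaws.com/<your_repository_name>:<tag>`.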
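Docker restricts what a tag may contain: ASCII letters, digits, underscores, periods, and hyphens, at most 128 characters, and it may not start with a period or a hyphen. A minimal Python sketch that checks a tag before you build, together with a hypothetical `<version>-<commit>` naming convention:

```python
import re

# Docker tag grammar: first character is a letter, digit, or underscore;
# the rest may also contain periods and hyphens; 128 characters at most.
TAG_PATTERN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def is_valid_tag(tag: str) -> bool:
    return TAG_PATTERN.fullmatch(tag) is not None


def release_tag(version: str, commit_sha: str) -> str:
    """Hypothetical convention: '<version>-<first 8 characters of the commit SHA>'."""
    tag = f"{version}-{commit_sha[:8]}"
    if not is_valid_tag(tag):
        raise ValueError(f"invalid Docker tag: {tag!r}")
    return tag
```

Combining a human-readable version with the commit hash keeps tags unique per build while still showing which release an image belongs to.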

4.3: Push the Image to ECR

Run the following command to push the tagged image to your ECR repository:

```shell
docker push <your_account_id>.dkr.ecr.<your_region>.amazonaws.com/<your_repository_name>:<tag>
```

This step makes the image available for deployment to Fargate. Common issues: if the push fails, check that Docker is still authenticated with ECR (the login token expires after 12 hours), then verify network connectivity and your ECR repository permissions.

4.4: Verify the Image

- Log into the AWS Management Console.

- Navigate to the ECR service.

- Check that the image has been successfully pushed to your repository. Verifying the image in ECR ensures that it is readily available for deployment and helps identify any potential issues during the build and push process.
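You can also check from the terminal: `aws ecr describe-images --repository-name <your_repository_name> --output json` lists the images in a repository. This minimal Python sketch, assuming that JSON output has been captured as a string, collects every tag so you can confirm the one you pushed is present.

```python
import json


def tags_in_describe_images(output: str) -> set:
    """Collect every tag listed in `aws ecr describe-images --output json` output."""
    data = json.loads(output)
    tags = set()
    for detail in data.get("imageDetails", []):
        # Untagged images have no "imageTags" key
        tags.update(detail.get("imageTags", []))
    return tags


def image_was_pushed(output: str, tag: str) -> bool:
    return tag in tags_in_describe_images(output)
```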

Step 5: Setting Up Amazon ECS with Fargate

5.1: Create a Cluster (Covered in Step 3.3)

5.2: Define a Task Definition

- Navigate to the ECS service in the AWS Management Console.

- Click “Task Definitions.”

- Click “Create new Task Definition.”

- Choose “Fargate.”

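- Configure the task definition:

  - Task Definition Name: Provide a descriptive name (e.g., “my-app-task”).

  - Task execution role: Select the IAM role you created in Step 3.4 so that ECS can pull the image from ECR.

  - Container Definitions:

    - Container Name: Give your container a name (e.g., “my-app-container”).

    - Image: Specify the URI of your Docker image in ECR.

    - Port Mappings: Map the port your application listens on (e.g., 3000).

  - Resource allocation: Configure the desired CPU and memory for your task. Fargate accepts only specific combinations, for example 0.25 vCPU with 0.5, 1, or 2 GB of memory.

  - Network mode: `awsvpc` (the only network mode Fargate supports).

- Click “Create.”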
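The same task definition can be written as JSON and registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`. This minimal Python sketch builds that JSON and rejects CPU/memory pairs that Fargate does not accept; the size table covers only the smaller task sizes, and the file name and example values are placeholders.

```python
import json

# Valid Fargate CPU units -> memory (MiB) for the smaller task sizes only
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fargate_task_definition(family, container_name, image, execution_role_arn,
                            port=3000, cpu=256, memory=512):
    """Return a task definition in the RegisterTaskDefinition JSON shape."""
    if memory not in FARGATE_SIZES.get(cpu, []):
        raise ValueError(f"unsupported Fargate size: cpu={cpu}, memory={memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate
        "cpu": str(cpu),  # task-level sizes are strings in this API
        "memory": str(memory),
        "executionRoleArn": execution_role_arn,  # lets ECS pull from ECR
        "containerDefinitions": [
            {
                "name": container_name,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


def save_task_definition(task_definition, path="taskdef.json"):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(task_definition, f, indent=2)
```

For example, `fargate_task_definition("my-app-task", "my-app-container", "<image_uri>", "arn:aws:iam::<your_account_id>:role/<execution_role_name>")` returns a 0.25 vCPU / 512 MiB definition ready to save and register.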

5.3: Create a Service to Manage the Task

- Navigate to your ECS cluster in the AWS Management Console.

- Click “Services.”

- Click “Create.”

- Configure the service:

  - Launch type: `FARGATE`

  - Task definition: Choose the task definition you created in the previous step.

  - Number of tasks: Specify the desired number of tasks (e.g., 1).

  - Network configuration:

    - Cluster VPC: Select your VPC.

    - Subnets: Select your subnets.

    - Security groups: Select your security groups.

    - Auto-assign public IP: `ENABLED` (in a public subnet without a NAT gateway, the task needs a public IP to reach ECR and pull its image).

  - Load balancing: Configure a load balancer if needed.

- Click “Create Service.”
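If you prefer to script the service, the console fields above map onto parameters of the ECS CreateService API. A minimal Python sketch that assembles them in the shape accepted by boto3's `create_service` (and by `aws ecs create-service --cli-input-json`); the subnet and security group IDs in the test-style examples are placeholders.

```python
def awsvpc_network_configuration(subnets, security_groups, assign_public_ip=True):
    """`networkConfiguration` value for a Fargate service or task (awsvpc mode)."""
    if not subnets:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnets),
            "securityGroups": list(security_groups),
            # ENABLED gives each task a public IP, needed in a public subnet without NAT
            "assignPublicIp": "ENABLED" if assign_public_ip else "DISABLED",
        }
    }


def create_service_args(cluster, service_name, task_definition, network_configuration,
                        desired_count=1):
    """Keyword arguments in the shape of the ECS CreateService API."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,  # family name or family:revision
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        "networkConfiguration": network_configuration,
    }
```

With boto3 installed and credentials configured, `boto3.client("ecs").create_service(**create_service_args(...))` would submit the request.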

5.4: Test the Application

- After the service is created, navigate to the “Tasks” tab.

- Find your running task and copy its public IP address.

- Open your web browser and navigate to the public IP address on the port your application listens on (e.g., `http://<public_ip_address>:3000`).

Testing the application verifies that the deployment succeeded and that the application is running as expected. If the page does not load, check that the security group allows inbound traffic on that port, then review the task’s logs in CloudWatch.
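A task can take a minute or two to start, so a check that retries is more reliable than a single request. A minimal Python sketch using only the standard library; `opener` is a parameter (an assumption of this sketch) so the function can be tested without a live task.

```python
import time
import urllib.request


def wait_until_healthy(url, attempts=10, delay=5.0, opener=urllib.request.urlopen):
    """Poll `url` until it answers with HTTP 200.

    Returns True on success, False once all attempts have failed.
    """
    for attempt in range(attempts):
        try:
            with opener(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, and timeouts all subclass OSError: the task may
            # still be starting, or the security group may be blocking the port
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, `wait_until_healthy("http://<public_ip_address>:3000")` retries for roughly 45 seconds with the defaults before giving up.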

Step 6: Creating the GitLab CI/CD Pipeline

6.1: Add .gitlab-ci.yml

Create a file named .gitlab-ci.yml in the root directory of your project. Add the following configuration:

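```yaml
stages:
  - build
  - deploy

variables:
  AWS_REGION: <your_region>
  AWS_ACCOUNT_ID: <your_account_id>
  ECR_REPOSITORY: <your_repository_name>
  ECS_CLUSTER: <your_cluster_name>
  ECS_SERVICE: <your_service_name>
  # Registry host and image name derived from the values above
  ECR_REGISTRY: $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com
  IMAGE: $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/$ECR_REPOSITORY

build:
  stage: build
  # Docker CLI image plus a Docker-in-Docker daemon, so `docker build` can run
  image: docker:24
  services:
    - docker:24-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    # The AWS CLI is needed to fetch the ECR login password
    - apk add --no-cache aws-cli
    - aws ecr get-login-password --region $AWS_REGION | docker login --username AWS --password-stdin $ECR_REGISTRY
  script:
    - docker build -t $IMAGE:$CI_COMMIT_SHORT_SHA -t $IMAGE:latest .
    - docker push $IMAGE:$CI_COMMIT_SHORT_SHA
    - docker push $IMAGE:latest

deploy:
  stage: deploy
  # Official AWS CLI image; the empty entrypoint lets GitLab run shell commands
  image:
    name: amazon/aws-cli:latest
    entrypoint: [""]
  script:
    - aws ecs update-service --cluster $ECS_CLUSTER --service $ECS_SERVICE --force-new-deployment --region $AWS_REGION
```

Replace the placeholder values with your own AWS details. This `.gitlab-ci.yml` file defines a two-stage pipeline:

- build: logs in to ECR, builds the image, and pushes it with two tags: the short commit SHA (`$CI_COMMIT_SHORT_SHA`, a GitLab predefined variable) and `latest`. The job uses the `docker` image with a Docker-in-Docker (`docker:dind`) service, because a plain language image such as `python` has no Docker daemon to build with. On self-managed runners, Docker-in-Docker requires the runner to run in privileged mode.

- deploy: runs `aws ecs update-service --force-new-deployment`, which replaces the service’s running tasks. It does not register a new task definition, so the new tasks run the new image only if the task definition references a tag that moves with every push, such as `latest`.

Variables keep environment-specific values in one place. To test before deploying, you can add a test stage between build and deploy.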
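The deploy step can also be expressed in Python with boto3, which makes it easy to wait until the rollout finishes. This minimal sketch assumes boto3 is installed and takes the ECS client as a parameter; `update_service` with `forceNewDeployment=True` mirrors the `aws ecs update-service --force-new-deployment` command, and the `services_stable` waiter blocks until the service reaches a steady state.

```python
def redeploy(ecs_client, cluster: str, service: str, wait: bool = True) -> dict:
    """Force a new deployment of an ECS service and optionally wait for it to settle.

    `ecs_client` is expected to be a boto3 ECS client, e.g. boto3.client("ecs").
    """
    response = ecs_client.update_service(
        cluster=cluster, service=service, forceNewDeployment=True
    )
    if wait:
        # Polls DescribeServices until the deployment is stable; raises if it times out
        ecs_client.get_waiter("services_stable").wait(cluster=cluster, services=[service])
    return response
```

For example, `redeploy(boto3.client("ecs", region_name="<your_region>"), "<your_cluster_name>", "<your_service_name>")`. Passing the client in, rather than creating it inside the function, keeps the function testable with a stand-in object.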

6.2: Configure Variables in GitLab

- Navigate to your project’s settings in GitLab.

- Go to “CI/CD” and expand “Variables.”

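- Add the following variables, using the access key of an IAM user that is allowed to push to ECR and update the ECS service:

  - `AWS_ACCESS_KEY_ID`

  - `AWS_SECRET_ACCESS_KEY`

Mark both variables as Masked so their values are hidden in job logs. The AWS CLI reads these two environment variables automatically, so the pipeline needs no further credential configuration. Storing secrets as CI/CD variables also keeps them out of your codebase.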
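A missing variable usually surfaces as a confusing error halfway through a job. This minimal Python sketch fails fast by listing the required variables that are unset or empty; the list assumes the variable names used in this guide’s pipeline, and `env` is a parameter so the check can be exercised with a plain dictionary.

```python
import os

# Variable names used by the pipeline in this guide (adjust to your own)
REQUIRED_VARIABLES = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_REGION",
    "AWS_ACCOUNT_ID",
    "ECR_REPOSITORY",
    "ECS_CLUSTER",
    "ECS_SERVICE",
)


def missing_variables(names=REQUIRED_VARIABLES, env=None):
    """Return the names that are unset or empty in `env` (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def require_variables(names=REQUIRED_VARIABLES, env=None):
    """Exit with a readable message if any required variable is missing."""
    missing = missing_variables(names, env)
    if missing:
        raise SystemExit("Missing CI/CD variables: " + ", ".join(missing))
```

Calling `require_variables()` at the start of a job that has Python available, or locally before a manual deploy, stops early with the names that need to be set. The message lists only names, never values.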

Step 7: Adding Monitoring with AWS CloudWatch

7.1: Set Up CloudWatch Logs

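Ensure your application logs are being sent to CloudWatch. On Fargate, the simplest route is the `awslogs` log driver, set in the log configuration of the container definition in your task definition: anything the application writes to stdout and stderr is forwarded to a CloudWatch Logs log group, with no changes to your application code. The task execution role needs permission to write to that log group. CloudWatch Logs provides a central place to store and analyze your application’s log data, which is essential for troubleshooting and monitoring application health.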
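With the `awslogs` driver, each container definition carries a `logConfiguration` block. This minimal Python sketch builds one, along with the log stream name ECS derives from the stream prefix (`prefix/container-name/task-id`). The log group name `/ecs/my-app` in the examples is an assumption, and the group must exist first (for example, `aws logs create-log-group --log-group-name /ecs/my-app`), because the managed task execution policy does not include permission to create log groups.

```python
def awslogs_configuration(log_group: str, region: str, stream_prefix: str = "ecs") -> dict:
    """`logConfiguration` for a container definition that sends stdout/stderr to CloudWatch Logs."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": log_group,  # must already exist
            "awslogs-region": region,
            "awslogs-stream-prefix": stream_prefix,
        },
    }


def log_stream_name(stream_prefix: str, container_name: str, task_id: str) -> str:
    """Stream name the awslogs driver uses when a stream prefix is set."""
    return f"{stream_prefix}/{container_name}/{task_id}"
```

Assigning the result to a container definition’s `"logConfiguration"` key, before registering the task definition, enables log collection for that container.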

7.2: Set Up Alarms for Monitoring

- Navigate to the CloudWatch service in the AWS Management Console.

- Click “Alarms” and then “Create alarm.”

- Choose a metric to monitor (e.g., CPUUtilization in the AWS/ECS namespace, filtered by your cluster and service names).

- Configure the alarm threshold and notification settings.

Alarms let you monitor critical metrics proactively and receive notifications when predefined thresholds are exceeded, so you can catch problems early and keep your application healthy and responsive. Consider alarms for CPU usage (CPUUtilization), memory usage (MemoryUtilization), and, if the service runs behind an Application Load Balancer, request latency (TargetResponseTime).
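The CPU and memory alarms described above can also be created programmatically. This minimal Python sketch returns the parameters for CloudWatch’s PutMetricAlarm API (boto3: `put_metric_alarm`) for an ECS service metric; the alarm name format, the 80% threshold, and the SNS topic ARN are assumptions. With a 300-second period and 2 evaluation periods, the alarm fires only after average utilization has stayed above the threshold for 2 × 300 = 600 seconds.

```python
# Service-level metrics that ECS publishes in the AWS/ECS namespace
ECS_SERVICE_METRICS = {"CPUUtilization", "MemoryUtilization"}


def service_alarm_args(cluster, service, sns_topic_arn, metric="CPUUtilization",
                       threshold=80.0, period=300, evaluation_periods=2):
    """Keyword arguments for CloudWatch PutMetricAlarm on an ECS service metric."""
    if metric not in ECS_SERVICE_METRICS:
        raise ValueError(f"unsupported metric: {metric}")
    return {
        "AlarmName": f"{service}-{metric}-high",  # hypothetical naming convention
        "Namespace": "AWS/ECS",
        "MetricName": metric,
        "Dimensions": [
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        "Statistic": "Average",
        "Period": period,  # seconds per datapoint
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold,  # percent
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. an SNS topic that emails you
    }


def seconds_until_alarm(args):
    """Minimum time the metric must stay above the threshold before the alarm fires."""
    return args["Period"] * args["EvaluationPeriods"]
```

With boto3, `boto3.client("cloudwatch").put_metric_alarm(**service_alarm_args(...))` would create or update the alarm.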

Conclusion

This guide provides a comprehensive walkthrough of building a robust CI/CD pipeline using GitLab CI/CD, Docker, and AWS Fargate. By implementing these steps, you can automate your application deployments, improve scalability, and enhance your overall development workflow. This approach significantly reduces manual effort, increases deployment frequency, and allows you to deliver high-quality software faster. Experiment with additional features like automated testing and database migrations to further enhance your pipeline.